The purpose of this section is to study two basic types of objects that form part of the model of a random experiment. Essential prerequisites for this section are set theory, functions, and cardinality (in particular, the distinction between countable and uncountable sets). Measurable spaces also play a fundamental role, but if you are a new student of probability, just ignore the measure-theoretic terminology and skip the technical details.

Recall that in a random experiment, the outcome cannot be predicted with certainty, before the experiment is run. On the other hand, we assume that we can identify a fixed set \( S \) that includes all possible outcomes of a random experiment. This set plays the role of the universal set when modeling the experiment. For simple experiments, \( S \) may be precisely the set of possible outcomes. More often, for complex experiments, \( S \) is a mathematically convenient set that includes the possible outcomes and perhaps other elements as well. For example, if the experiment is to throw a standard die and record the score that occurs, we would let \(S = \{1, 2, 3, 4, 5, 6\}\), the set of possible outcomes. On the other hand, if the experiment is to capture a cicada and measure its body weight (in milligrams), we might conveniently take \(S = [0, \infty)\), even though most elements of this set are impossible (we hope!). The problem is that we may not know exactly the outcomes that are possible. Can a light bulb burn without failure for one thousand hours? For one thousand days? For one thousand years?

Often the outcome of a random experiment consists of one or more real measurements, and so \( S \) consists of all possible measurement sequences, a subset of \(\R^n\) for some \(n \in \N_+\). More generally, suppose that we have \(n\) experiments and that \( S_i \) is the set of outcomes for experiment \( i \in \{1, 2, \ldots, n\} \). Then the Cartesian product \(S_1 \times S_2 \times \cdots \times S_n\) is the natural set of outcomes for the compound experiment that consists of performing the \(n\) experiments in sequence. In particular, if we have a basic experiment with \(S\) as the set of outcomes, then \(S^n\) is the natural set of outcomes for the compound experiment that consists of \(n\) replications of the basic experiment. Similarly, if we have an infinite sequence of experiments and \( S_i \) is the set of outcomes for experiment \( i \in \N_+ \), then \(S_1 \times S_2 \times \cdots\) is the natural set of outcomes for the compound experiment that consists of performing the given experiments in sequence. In particular, the set of outcomes for the compound experiment that consists of indefinite replications of a basic experiment is \(S^\infty = S \times S \times \cdots \). This is an essential special case, because (classical) probability theory is based on the idea of replicating a given experiment.
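As a small illustration of the product construction, the following Python sketch builds \(S^n\) for the die experiment with \(n = 2\). The variable names are illustrative assumptions and are not part of the text.

```python
from itertools import product

# Set of outcomes for one throw of a standard die
S = [1, 2, 3, 4, 5, 6]

# Set of outcomes S^n for n replications, built as a Cartesian product
n = 2
S_n = list(product(S, repeat=n))

print(len(S_n))   # 6 ** n ordered sequences
print(S_n[:3])    # the first few ordered pairs
```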

Consider again a random experiment with \( S \) as the set of outcomes. Certain subsets of \( S \) are referred to as events. Suppose that \( A \subseteq S \) is a given event, and that the experiment is run, resulting in outcome \( s \in S \). If \( s \in A \) then we say that \( A \) occurs; if \( s \notin A \) then we say that \( A \) does not occur.

Intuitively, you should think of an event as a meaningful statement about the experiment: every such statement translates into an event, namely the set of outcomes for which the statement is true.

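Consider an experiment with \(S\) as the set of outcomes. The collection \(\ms S\) of events is required to be a \(\sigma\)-algebra of subsets of \(S\). That is, \(S \in \ms S\); if \(A \in \ms S\) then \(A^c \in \ms S\); and if \(A_i \in \ms S\) for each \(i\) in a countable index set \(I\), then \(\bigcup_{i \in I} A_i \in \ms S\).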

The measurable space \((S, \ms S)\) is the sample space of the experiment.

So \(S\) itself is an event; by definition it always occurs. At the other extreme, the empty set \(\emptyset\) is also an event; by definition it never occurs. When \(S\) is uncountable then sometimes for technical reasons, not every subset of \(S\) can be allowed as an event, but the requirement that the collection of events be a \(\sigma\)-algebra ensures that new sets that are constructed in a reasonable way from given events, using the set operations, are themselves valid events. More importantly, \(\sigma\)-algebras are essential in probability theory as a way to describe the information that we have about an experiment.

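Most of the sample spaces that occur in elementary probability fall into two general categories: discrete spaces, where \(S\) is countable and \(\ms S\) is the collection of all subsets of \(S\), and Euclidean spaces, where \(S\) is a measurable subset of \(\R^n\) for some \(n \in \N_+\) and \(\ms S\) is the collection of measurable subsets of \(S\).

In the Euclidean case, the measurable subsets of \(\R^n\) include all of the sets encountered in calculus and in standard applications of probability theory, and many more besides. Typically \( S \) is a set defined by a finite number of equations or inequalities involving elementary functions.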

The standard algebra of sets leads to a grammar for discussing random experiments and allows us to construct new events from given events. In the following results, suppose that \( S \) is the set of outcomes of a random experiment, and that \(A\) and \(B\) are events.

\(A \subseteq B\) if and only if the occurrence of \(A\) implies the occurrence of \(B\).

Recall that \( \subseteq \) is the subset relation. So by definition, \( A \subseteq B \) means that \( s \in A \) implies \( s \in B \).

\(A \cup B\) is the event that occurs if and only if \(A\) occurs or \(B\) occurs.

\(A \cap B\) is the event that occurs if and only if \(A\) occurs and \(B\) occurs.

Recall that \( A \cap B \) is the intersection of \( A \) and \( B \). By De Morgan's law, \(A \cap B = \left(A^c \cup B^c\right)^c\) and hence \(A \cap B \in \ms S\), since the collection of events is closed under complements and unions. By definition, \( s \in A \cap B \) if and only if \( s \in A \) and \( s \in B \).

\(A\) and \(B\) are disjoint if and only if they are mutually exclusive; they cannot both occur on the same run of the experiment.

By definition, \( A \) and \( B \) disjoint means that \( A \cap B = \emptyset \).

\(A^c\) is the event that occurs if and only if \(A\) does not occur.

\(A \setminus B\) is the event that occurs if and only if \(A\) occurs and \(B\) does not occur.

\((A \cap B^c) \cup (B \cap A^c)\) is the event that occurs if and only if one but not both of the given events occurs.

Recall that \((A \cap B^c) \cup (B \cap A^c)\) is the symmetric difference of \(A\) and \(B\), and is sometimes denoted \(A \Delta B\). This event corresponds to exclusive or, as opposed to the ordinary union \( A \cup B \) which corresponds to inclusive or.

\((A \cap B) \cup (A^c \cap B^c)\) is the event that occurs if and only if both or neither of the given events occurs.

In the Venn diagram app, observe the diagram of each of the 16 events that can be constructed from \(A\) and \(B\).
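The count of 16 can be checked by brute force. The sketch below is only an illustration: it assumes the two-dice set of outcomes, with \(A\) the event that the first score is 1 and \(B\) the event that the sum is 7, and it builds every event that can be formed from \(A\) and \(B\) as a union of the four disjoint pieces \(A \cap B\), \(A \cap B^c\), \(A^c \cap B\), and \(A^c \cap B^c\).

```python
from itertools import combinations, product

S = set(product(range(1, 7), repeat=2))      # two standard dice
A = {s for s in S if s[0] == 1}              # first score is 1
B = {s for s in S if s[0] + s[1] == 7}       # sum of the scores is 7

# The four disjoint pieces determined by A and B (all nonempty here)
atoms = [A & B, A - B, B - A, S - (A | B)]

# Every event built from A and B is a union of some of these pieces
events = set()
for k in range(len(atoms) + 1):
    for chosen in combinations(atoms, k):
        events.add(frozenset().union(*chosen))

symmetric_difference = (A - B) | (B - A)
print(len(events))                                  # number of distinct events
print(frozenset(symmetric_difference) in events)    # exclusive or is one of them
```

The count is \(2^4\) only because all four pieces are nonempty in this example; if some piece were empty, fewer distinct events would result.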

Suppose now that \(\ms A = \{A_i: i \in I\}\) is a collection of events for the random experiment, where \(I\) is a countable index set.

\( \bigcup \ms A = \bigcup_{i \in I} A_i \) is the event that occurs if and only if at least one event in the collection occurs.

\( \bigcap \ms A = \bigcap_{i \in I} A_i \) is the event that occurs if and only if every event in the collection occurs:

By De Morgan's law, \(\bigcap_{i \in I} A_i = \left(\bigcup_{i \in I} A_i^c\right)^c\) and hence is an event, since the collection of events is closed under complements and countable unions. By definition, \( s \in \bigcap_{i \in I} A_i \) if and only if \( s \in A_i \) for every \( i \in I \).

\(\ms A\) is a pairwise disjoint collection if and only if the events are mutually exclusive; at most one of the events could occur on a given run of the experiment.

By definition, \( A_i \cap A_j = \emptyset \) for distinct \( i, \, j \in I \).

Suppose now that \((A_1, A_2, \ldots)\) is an infinite sequence of events.

\(\bigcap_{n=1}^\infty \bigcup_{i=n}^\infty A_i\) is the event that occurs if and only if infinitely many of the given events occur. This event is sometimes called the limit superior of \((A_1, A_2, \ldots)\).

\(\bigcap_{n=1}^\infty \bigcup_{i=n}^\infty A_i\) is an event, since the collection of events is closed under countable unions and countable intersections. Note that \( s \in \bigcap_{n=1}^\infty \bigcup_{i=n}^\infty A_i\) if and only if for every \( n \in \N_+ \) there exists \( i \in \N_+ \) with \( i \ge n \) such that \( s \in A_i \). In turn this means that \( s \in A_i \) for infinitely many \( i \in \N_+ \).

\(\bigcup_{n=1}^\infty \bigcap_{i=n}^\infty A_i\) is the event that occurs if and only if all but finitely many of the given events occur. This event is sometimes called the limit inferior of \((A_1, A_2, \ldots)\).

As before, \(\bigcup_{n=1}^\infty \bigcap_{i=n}^\infty A_i\) is an event, since the collection of events is closed under countable unions and countable intersections. Note that \( s \in \bigcup_{n=1}^\infty \bigcap_{i=n}^\infty A_i\) if and only if there exists \( n \in \N_+ \) such that \( s \in A_i \) for every \( i \in \N_+ \) with \( i \ge n \). In turn, this means that \( s \in A_i \) for all but finitely many \( i \in \N_+ \).

Limit superiors and inferiors are discussed in more detail in the section on convergence.
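A simple worked example (not from the text) may help fix the two definitions. Suppose that the sequence alternates between two events \(A\) and \(B\), so that \(A_i = A\) for odd \(i\) and \(A_i = B\) for even \(i\). Then for every \(n \in \N_+\), \(\bigcup_{i=n}^\infty A_i = A \cup B\) and \(\bigcap_{i=n}^\infty A_i = A \cap B\), so \[\bigcap_{n=1}^\infty \bigcup_{i=n}^\infty A_i = A \cup B, \quad \bigcup_{n=1}^\infty \bigcap_{i=n}^\infty A_i = A \cap B\] This agrees with the verbal descriptions: infinitely many of the events occur if and only if \(A\) occurs or \(B\) occurs, while all but finitely many of the events occur if and only if both \(A\) and \(B\) occur.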

Suppose again that we have a random experiment with sample space \((S, \ms S)\). Intuitively, a random variable is a measurement of interest in the context of the experiment. Simple examples include the number of heads when a coin is tossed several times, the sum of the scores when a pair of dice are thrown, the lifetime of a device subject to random stress, and the weight of a person chosen from a population. Many more examples are given in exercises below. Mathematically, a random variable is a function defined on the set of outcomes.

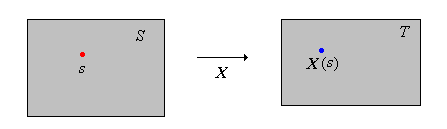

Suppose that \((T, \ms T)\) is a measurable space. A measurable function \( X \) from \( S \) into \( T \) is a random variable with values in \( T \).

So \(\ms T\) is a \(\sigma\)-algebra of subsets of \(T\) and hence satisfies the same set of axioms as the collection of events \(\ms S\). Typically, just like \((S, \ms S)\), the measurable space \((T, \ms T)\) is either discrete or a subspace of a Euclidean space. Think of \(\ms T\) as the collection of admissible subsets of \(T\). The assumption that \(X\) is measurable ensures that meaningful statements involving \( X \) define events. Probability has its own notation, very different from other branches of mathematics. As a case in point, random variables, even though they are functions, are usually denoted by capital letters near the end of the alphabet. The use of a letter near the end of the alphabet is intended to emphasize the idea that the object is a variable in the context of the experiment. The use of a capital letter is intended to emphasize the fact that it is not an ordinary algebraic variable to which we can assign a specific value, but rather a random variable whose value is indeterminate until we run the experiment. Specifically, when we run the experiment an outcome \(s \in S\) occurs, and random variable \(X\) takes the value \(X(s) \in T\).

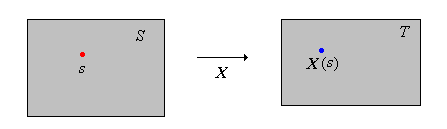

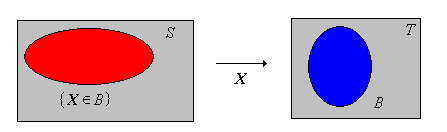

For \( A \in \ms T \), we use the notation \( \{X \in A\} \) for the inverse image \( \{s \in S: X(s) \in A\} \), rather than \( X^{-1}(A) \).

Again, the notation is more natural since we think of \( X \) as a variable in the experiment. The fact that \(X\) is measurable means precisely that \(\{X \in A\} \in \ms S\) for every \(A \in \ms T\). Think of \( \{X \in A\} \) as a statement about \( X \), which then translates into the event \( \{s \in S: X(s) \in A\} \).

Again, every statement about a random variable \( X \) with values in \( T \) translates into an inverse image of the form \( \{X \in A\} \) for some \( A \in \ms T \). So, for example, if \( x \in T \) (and under the natural assumption that \(\{x\} \in \ms T\)), \[\{X = x\} = \{X \in \{x\}\} = \left\{s \in S: X(s) = x\right\}\] If \(X\) is a real-valued random variable and \( a, \, b \in \R \) with \( a \lt b \) then \[\{ a \leq X \leq b\} = \left\{ X \in [a, b]\right\} = \{s \in S: a \leq X(s) \leq b\}\]
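The translation from statements about a random variable to events can be made concrete in code. The following is a minimal sketch, assuming the two-dice set of outcomes and taking the random variable to be the sum of the scores; the helper name `event` is hypothetical.

```python
from itertools import product

S = set(product(range(1, 7), repeat=2))   # outcomes for two standard dice

def Y(s):
    """Random variable: the sum of the two scores."""
    return s[0] + s[1]

def event(X, A):
    """The event {X in A}: the inverse image of A under X."""
    return {s for s in S if X(s) in A}

print(sorted(event(Y, {7})))         # the event {Y = 7}, as a list of outcomes
print(len(event(Y, range(3, 6))))    # number of outcomes in {3 <= Y <= 5}
```

Here the statement "the sum is 7" becomes a subset of \(S\), exactly as \(\{X = x\}\) is shorthand for \(\{s \in S: X(s) = x\}\).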

Suppose that \(X\) is a random variable with values in \(T\), and that \(A, \, B \in \ms T\). Then

This is a restatement of the fact that inverse images of a function preserve the set operations; only the notation changes (and in fact is simpler).

As with a general function, the inverse image of a union is the union of the inverse images, so \(\left\{X \in \bigcup_{i \in I} A_i\right\} = \bigcup_{i \in I} \{X \in A_i\}\) for a countable collection \(\{A_i: i \in I\}\) of sets in \(\ms T\), and similarly for the intersection of a countable collection of sets in \(\ms T\). No new ideas are involved; only the notation is more complicated.

Often, a random variable takes values in a measurable subset \(T\) of \(\R^k\) for some \(k \in \N_+\). We might express such a random variable as \(\bs{X} = (X_1, X_2, \ldots, X_k)\) where \(X_i\) is a real-valued random variable for each \(i \in \{1, 2, \ldots, k\}\). In this case, we usually refer to \(\bs X\) as a random vector, to emphasize its higher-dimensional character. A random variable can have an even more complicated structure. For example, if the experiment is to select \(n\) objects from a population and record various real measurements for each object, then the outcome of the experiment is a vector of vectors: \(\bs X = (X_1, X_2, \ldots, X_n)\) where \(X_i\) is the vector of measurements for the \(i\)th object. There are other possibilities; a random variable could be an infinite sequence, or could be set-valued. Specific examples are given in the computational exercises below. However, the important point is simply that a random variable is a (measurable) function defined on the set of outcomes \(S\).

The outcome of the experiment itself can be thought of as a random variable. Specifically, let \(T = S\), \(\ms T = \ms S\), and let \(X\) denote the identity function on \(S\) so that \(X(s) = s\) for \(s \in S\). Then trivially \(X\) is a random variable, and the events that can be defined in terms of \(X\) are simply the original events of the experiment. That is, if \( A \) is an event then \( \{X \in A\} = A \). Conversely, every random variable effectively defines a new random experiment.

In the general setting above, a random variable \(X\) defines a new random experiment with \( T \) as the new set of outcomes and \(\ms T\) as the new collection of events.

In fact, often a random experiment is modeled by specifying the random variables of interest, in the language of the experiment. Then, a mathematical definition of the random variables specifies the sample space. A function (or transformation) of a random variable defines a new random variable.

Suppose that \( X \) is a random variable for the experiment with values in \(T\), as above, and that \((U, \ms U)\) is another measurable space. If \( g: T \to U \) is measurable then \( Y = g(X) \) is a random variable with values in \( U \).

The assumption that \(g\) is measurable ensures that \(Y = g(X)\) is a measurable function from \(S\) into \(U\), and hence is a valid random variable. Specifically, if \(A \in \ms U\) then by definition, \(g^{-1}(A) \in \ms T\) and hence \[\{Y \in A\} = \{g(X) \in A\} = \{X \in g^{-1}(A)\} \in \ms S\]

Note that, as functions, \( g(X) = g \circ X \), the composition of \( g \) with \( X \). But again, thinking of \( X \) and \( Y \) as variables in the context of the experiment, the notation \( Y = g(X) \) is much more natural.

For an event \(A\), the indicator function of \(A\) is called the indicator variable of \(A\).

The value of this random variable tells us whether or not \(A\) has occurred: \(\bs 1_A = 1\) if \(A\) occurs and \(\bs 1_A = 0\) if \(A\) does not occur. That is, as a function on \( S \), \(\bs 1_A(s) = 1\) if \(s \in A\) and \(\bs 1_A(s) = 0\) if \(s \notin A\).

If \(X\) is a random variable that takes values 0 and 1, then \(X\) is the indicator variable of the event \(\{X = 1\}\).

Note that for \( s \in S \), \( X(s) = 1\) if \( s \in \{X = 1\} \) and \( X(s) = 0 \) otherwise.

Recall also that the set algebra of events translates into the arithmetic algebra of indicator variables.

Suppose that \(A\) and \(B\) are events.

The identity \(\bs 1_{A \cap B} = \bs 1_A \bs 1_B\) extends to countable intersections, and the identity \(\bs 1_{A \cup B} = 1 - (1 - \bs 1_A)(1 - \bs 1_B)\) extends to countable unions. If the event \( A \) has a complicated description, sometimes we use \( \bs 1 (A) \) for the indicator variable rather than \( \bs 1_A \).
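The correspondence between set operations and arithmetic can be checked numerically. The sketch below is an illustration under assumed choices (the two-dice set of outcomes and two particular events): it verifies that the indicator of an intersection is the product of the indicators, and that the indicator of a union is one minus the product of the indicators of the complements.

```python
from itertools import product

S = set(product(range(1, 7), repeat=2))      # two standard dice
A = {s for s in S if s[0] == 1}              # first score is 1
B = {s for s in S if s[0] + s[1] == 7}       # sum of the scores is 7

def indicator(E):
    """Return the indicator variable of the event E, as a function on S."""
    return lambda s: 1 if s in E else 0

one_A, one_B = indicator(A), indicator(B)
one_AB = indicator(A & B)
one_AuB = indicator(A | B)

# Intersection corresponds to the product of the indicators
intersection_ok = all(one_AB(s) == one_A(s) * one_B(s) for s in S)
# Union corresponds to one minus the product of the complements
union_ok = all(one_AuB(s) == 1 - (1 - one_A(s)) * (1 - one_B(s)) for s in S)

print(intersection_ok, union_ok)
```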

Recall that probability theory is often illustrated using simple devices from games of chance: coins, dice, cards, spinners, urns with balls, and so forth. Examples based on such devices are pedagogically valuable because of their simplicity and conceptual clarity. On the other hand, remember that probability is not only about gambling and games of chance. Rather, try to see problems involving coins, dice, etc. as metaphors for more complex and realistic problems.

The basic coin experiment consists of tossing a coin \(n\) times and recording the sequence of scores \((X_1, X_2, \ldots, X_n)\) (where 1 denotes heads and 0 denotes tails).

This experiment is a generic example of \(n\) Bernoulli trials, named for Jacob Bernoulli.

Consider the coin experiment with \(n = 4\), and let \(Y\) denote the number of heads.

To simplify the notation, we represent outcomes as bit strings rather than ordered sequences.

In the simulation of the coin experiment, set \(n = 4\). Run the experiment 100 times and count the number of times that the event \(\{Y = 2\}\) occurs.
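If the simulation app is not at hand, the same experiment can be imitated in a few lines of Python. This is only a sketch: it assumes a fair coin and independent tosses (the text has not yet specified any probabilities), and the function name and seed are arbitrary.

```python
import random

def coin_experiment(n, rng):
    """Toss a coin n times; 1 denotes heads and 0 denotes tails."""
    # Assumption: a fair coin, so each score is 0 or 1 with equal chance
    return tuple(rng.randint(0, 1) for _ in range(n))

rng = random.Random(2024)   # arbitrary seed, for reproducibility only
runs = [coin_experiment(4, rng) for _ in range(100)]

# Y is the number of heads; count the runs on which {Y = 2} occurs
count = sum(1 for x in runs if sum(x) == 2)
print(count)
```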

Now consider the general coin experiment with the coin tossed \(n\) times, and let \(Y\) denote the number of heads.

The basic dice experiment consists of throwing \(n\) distinct \(k\)-sided dice (with faces numbered from 1 to \(k\)) and recording the sequence of scores \((X_1, X_2, \ldots, X_n)\).

This experiment is a generic example of \(n\) multinomial trials. The special case \(k = 6\) corresponds to standard dice.

Consider the dice experiment with \(n = 2\) standard dice. Let \(S\) denote the set of outcomes, \(A\) the event that the first die score is 1, and \(B\) the event that the sum of the scores is 7. Give each of the following events in the form indicated:

In the simulation of the dice experiment, set \(n = 2\). Run the experiment 100 times and count the number of times each event in the previous exercise occurs.

Consider the dice experiment with \(n = 2\) standard dice, and let \( S \) denote the set of outcomes, \(Y\) the sum of the scores, \(U\) the minimum score, and \(V\) the maximum score.

Note that \( S = \{1, 2, 3, 4, 5, 6\}^2 \). The following functions are defined on \( S \).

Consider again the dice experiment with \(n = 2\) standard dice, and let \( S \) denote the set of outcomes, \(Y\) the sum of the scores, \(U\) the minimum score, and \(V\) the maximum score. Give each of the following as subsets of \( S \), in list form.

In the dice experiment, set \(n = 2\). Run the experiment 100 times. Count the number of times each event in the previous exercise occurred.

In the general dice experiment with \(n\) distinct \(k\)-sided dice, let \(Y\) denote the sum of the scores, \(U\) the minimum score, and \(V\) the maximum score.

The set of outcomes of a random experiment depends of course on what information is recorded. The following exercise is an illustration.

An experiment consists of throwing a pair of standard dice repeatedly until the sum of the two scores is either 5 or 7. Let \(A\) denote the event that the sum is 5 rather than 7 on the final throw.

Let \(D_5 = \{(1,4), (2,3), (3,2), (4,1)\}\), \(D_7 = \{(1,6), (2,5), (3,4), (4,3), (5,2), (6,1)\}\), \(D = D_5 \cup D_7 \), and \( C = D^c \)

Experiments of this type arise in the casino game craps. Note the simplicity of part (b) compared to part (a).

Suppose that 3 standard dice are rolled and the sequence of scores \((X_1, X_2, X_3)\) is recorded. A person pays $1 to play. If some of the dice come up 6, then the player receives her $1 back, plus $1 for each 6. Otherwise she loses her $1. Let \(W\) denote the person's net winnings.

This game is known as chuck-a-luck.

Play the chuck-a-luck experiment a few times and see how you do.
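The net winnings \(W\) form a function of the outcome \((X_1, X_2, X_3)\), and the rule in the description translates directly into code. The sketch below assumes fair dice for the simulated plays; the function name and seed are illustrative.

```python
import random

def net_winnings(scores):
    """Net winnings W for chuck-a-luck, as a function of the three scores."""
    sixes = sum(1 for x in scores if x == 6)
    # With at least one 6, the $1 stake is returned plus $1 per 6;
    # otherwise the $1 stake is lost.
    return sixes if sixes > 0 else -1

rng = random.Random(7)   # arbitrary seed
for _ in range(5):
    scores = tuple(rng.randint(1, 6) for _ in range(3))
    print(scores, net_winnings(scores))
```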

In the die-coin experiment, a standard die is rolled and then a coin is tossed the number of times shown on the die. The sequence of coin scores \(\bs X\) is recorded (0 for tails, 1 for heads). Let \(N\) denote the die score and \(Y\) the number of heads.

Run the simulation of the die-coin experiment 10 times. For each run, give the values of the random variables \(\bs X\), \(N\), and \(Y\) of the previous exercise. Count the number of times the event \(A\) occurs.
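A single run of the die-coin experiment can also be sketched in Python. The code assumes a fair die and a fair coin, which the text does not require; it returns the sequence \(\bs X\), the die score \(N\), and the number of heads \(Y\).

```python
import random

def die_coin(rng):
    """One run of the die-coin experiment: returns (X, N, Y)."""
    N = rng.randint(1, 6)                             # die score
    X = tuple(rng.randint(0, 1) for _ in range(N))    # N coin scores
    Y = sum(X)                                        # number of heads
    return X, N, Y

rng = random.Random(11)   # arbitrary seed
for _ in range(10):
    print(die_coin(rng))
```

Note that the length of \(\bs X\) varies from run to run, so the set of outcomes is a union of the sets \(\{0, 1\}^n\) over \(n \in \{1, 2, \ldots, 6\}\).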

In the coin-die experiment, we have a coin and two distinct dice, say one red and one green. First the coin is tossed, and then if the result is heads the red die is thrown, while if the result is tails the green die is thrown. The coin score \(X\) and the score of the chosen die \(Y\) are recorded. Suppose now that the red die is a standard 6-sided die, and the green die a 4-sided die.

Run the coin-die experiment 100 times, with various types of dice.

The die-coin experiment and the coin-die experiment are examples of experiments that occur in two stages, where the result of the second stage is influenced by the result of the first stage.

Recall that many random experiments can be thought of as sampling experiments. For the general finite sampling model, we start with a population \(D\) with \(m\) (distinct) objects. We select a sample of \(n\) objects from the population. If the sampling is done in a random way, then we have a random experiment with the sample as the basic outcome. Thus, the set of outcomes \(S\) of the experiment is literally the set of samples; this is the historical origin of the term sample space. There are four common types of sampling from a finite population, based on the criteria of order and replacement. Recall the following facts from combinatorial structures:

Samples of size \( n \) chosen from a population with \( m \) elements.

If we sample with replacement, the sample size \(n\) can be any positive integer. If we sample without replacement, the sample size cannot exceed the population size, so we must have \(n \in \{1, 2, \ldots, m\}\).

The basic coin experiment and the basic dice experiment are examples of sampling with replacement. If we toss a coin \(n\) times and record the sequence of scores (where as usual, 0 denotes tails and 1 denotes heads), then we generate an ordered sample of size \(n\) with replacement from the population \(\{0, 1\}\). If we throw \(n\) (distinct) standard dice and record the sequence of scores, then we generate an ordered sample of size \(n\) with replacement from the population \(\{1, 2, 3, 4, 5, 6\}\).

Suppose that the sampling is without replacement (the most common case). If we record the ordered sample \(\bs X = (X_1, X_2, \ldots, X_n)\), then the unordered sample \(\bs W = \{X_1, X_2, \ldots, X_n\}\) is a random variable (that is, a function of \(\bs X\)). On the other hand, if we just record the unordered sample \(\bs W\) in the first place, then we cannot recover the ordered sample. Note also that the number of ordered samples of size \(n\) is simply \(n!\) times the number of unordered samples of size \(n\). No such simple relationship exists when the sampling is with replacement. This will turn out to be an important point when we study probability models based on random samples.
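The factor of \(n!\) can be checked with the standard counting formulas. The following sketch uses an assumed small case, \(m = 5\) and \(n = 3\), and Python's `math.perm` and `math.comb` (available in Python 3.8 and later).

```python
from math import comb, factorial, perm

m, n = 5, 3   # population size and sample size (illustrative values)

ordered_without = perm(m, n)          # ordered samples, without replacement
unordered_without = comb(m, n)        # unordered samples, without replacement
ordered_with = m ** n                 # ordered samples, with replacement
unordered_with = comb(m + n - 1, n)   # unordered samples, with replacement

# Without replacement: ordered count is n! times the unordered count
print(ordered_without == factorial(n) * unordered_without)
# With replacement: the same relationship fails
print(ordered_with == factorial(n) * unordered_with)
```

The relationship fails with replacement because an unordered sample with repeated elements has fewer than \(n!\) distinct orderings.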

Consider a sample of size \(n = 3\) chosen without replacement from the population \(\{a, b, c, d, e\}\).

Traditionally in probability theory, an urn containing balls is often used as a metaphor for a finite population.

Suppose that an urn contains 50 (distinct) balls. A sample of 10 balls is selected from the urn. Find the number of samples in each of the following cases:

Suppose again that we have a population \(D\) with \(m\) (distinct) objects, but suppose now that each object is one of two types—either type 1 or type 0. Such populations are said to be dichotomous. Here are some specific examples:

Suppose that the population \(D\) has \(r\) type 1 objects and hence \(m - r\) type 0 objects. Of course, we must have \(r \in \{0, 1, \ldots, m\}\). Now suppose that we select a sample of size \(n\) without replacement from the population. Note that this model has three parameters: the population size \(m\), the number of type 1 objects in the population \(r\), and the sample size \(n\).

Let \(Y\) denote the number of type 1 objects in the sample. Then

A batch of 50 components consists of 40 good components and 10 defective components. A sample of 5 components is selected, without replacement. Let \(Y\) denote the number of defectives in the sample.

Run the simulation of the ball and urn experiment 100 times for the parameter values in the last exercise: \( m = 50 \), \( r = 10 \), \( n = 5 \). Note the values of the random variable \(Y\).
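The same runs can be imitated in Python. This sketch assumes that every sample of size \(n\) is equally likely, which is what `random.Random.sample` provides; the list encoding of the population is an illustrative choice.

```python
import random

m, r, n = 50, 10, 5
# Encode the population: 1 for a type 1 object (defective), 0 for type 0 (good)
population = [1] * r + [0] * (m - r)

rng = random.Random(1)   # arbitrary seed
# Each run draws n objects without replacement and records Y
values = [sum(rng.sample(population, n)) for _ in range(100)]

print(values[:10])
print(min(values), max(values))
```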

Recall that a standard card deck can be modeled by the Cartesian product set \[D = \{1, 2, 3, 4, 5, 6, 7, 8, 9, 10, j, q, k\} \times \{\clubsuit, \diamondsuit, \heartsuit, \spadesuit\}\] where the first coordinate encodes the denomination or kind (ace, 2–10, jack, queen, king) and where the second coordinate encodes the suit (clubs, diamonds, hearts, spades). Sometimes we represent a card as a string rather than an ordered pair (for example \(q \heartsuit\) rather than \((q, \heartsuit)\) for the queen of hearts).

Most card games involve sampling without replacement from the deck \(D\), which plays the role of the population, as described above. Thus, the basic card experiment consists of dealing \(n\) cards from a standard deck without replacement; in this special context, the sample of cards is often referred to as a hand. Just as in the general sampling model, if we record the ordered hand \(\bs X = (X_1, X_2, \ldots, X_n)\), then the unordered hand \(\bs W = \{X_1, X_2, \ldots, X_n\}\) is a random variable (that is, a function of \(\bs X\)). On the other hand, if we just record the unordered hand \(\bs W\) in the first place, then we cannot recover the ordered hand. Finally, recall that in this text, \(n = 5\) is the poker experiment and \(n = 13\) is the bridge experiment. The game of poker is treated in more detail in the chapter on games of chance.
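The deck and the sizes of the poker and bridge sets of outcomes are easy to generate. The sketch below uses plain strings for the denominations and suits, which is an encoding chosen here for illustration.

```python
from itertools import product
from math import comb

kinds = ["1", "2", "3", "4", "5", "6", "7", "8", "9", "10", "j", "q", "k"]
suits = ["clubs", "diamonds", "hearts", "spades"]

# The deck as a Cartesian product of denominations and suits
D = list(product(kinds, suits))

print(len(D))             # number of cards
print(comb(len(D), 5))    # number of unordered poker hands
print(comb(len(D), 13))   # number of unordered bridge hands
```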

Suppose that a single card is dealt from a standard deck. Let \(Q\) denote the event that the card is a queen and \(H\) the event that the card is a heart. Give each of the following events in list form:

In the card experiment, set \(n = 1\). Run the experiment 100 times and count the number of times each event in the previous exercise occurs.

Suppose that two cards are dealt from a standard deck and the sequence of cards recorded. Let \( S \) denote the set of outcomes, and let \(Q_i\) denote the event that the \(i\)th card is a queen and \(H_i\) the event that the \(i\)th card is a heart for \(i \in \{1, 2\}\). Find the number of outcomes in each of the following events:

Consider the general card experiment in which \(n\) cards are dealt from a standard deck, and the ordered hand \(\bs X\) is recorded.

Consider the bridge experiment of dealing 13 cards from a deck and recording the unordered hand. In the most common honor point count system, an ace is worth 4 points, a king 3 points, a queen 2 points, and a jack 1 point. The other cards are worth 0 points. Let \( S \) denote the set of outcomes of the experiment and \(V\) the honor-card value of the hand.

In the card experiment, set \(n = 13\) and run the experiment 100 times. For each run, compute the value of random variable \(V\) in the previous exercise.

Consider the poker experiment of dealing 5 cards from a deck. Find the cardinality of each of the events below, as a subset of the set of unordered hands.

Run the poker experiment 1000 times. Note the number of times that the events \(A\), \(B\), and \(C\) in the previous exercise occurred.

Consider the bridge experiment of dealing 13 cards from a standard deck. Let \( S \) denote the set of unordered hands, \(Y\) the number of hearts in the hand, and \(Z\) the number of queens in the hand.

In the experiments that we have considered so far, the sample spaces have all been discrete (so that the set of outcomes is finite or countably infinite). In this subsection, we consider Euclidean sample spaces where the set of outcomes \(S\) is continuous in a sense that we will make clear later. The experiments we consider are sometimes referred to as geometric models because they involve selecting a point at random from a Euclidean set.

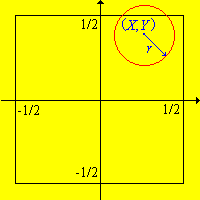

Buffon's coin experiment consists of tossing a coin with radius \(r \le \frac{1}{2}\) randomly on a floor covered with square tiles of side length 1. The coordinates \((X, Y)\) of the center of the coin are recorded relative to axes through the center of the square in which the coin lands.

In Buffon's coin experiment, let \( S \) denote the set of outcomes, \(A\) the event that the coin does not touch the sides of the square, and let \(Z\) denote the distance from the center of the coin to the center of the square.

Run Buffon's coin experiment 100 times with \(r = 0.2\). For each run, note whether event \(A\) occurs and compute the value of random variable \(Z\).
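A run of Buffon's coin experiment can be sketched as follows. Two assumptions are made that go beyond the text: the center of the coin is taken to be uniformly distributed on the square \(\left[-\frac{1}{2}, \frac{1}{2}\right]^2\), and "does not touch" is read as a strict inequality.

```python
import math
import random

def buffon_coin(r, rng):
    """One run: returns the center (x, y), whether A occurs, and the value of Z."""
    # Assumption: the center is uniform on the square [-1/2, 1/2]^2
    x = rng.uniform(-0.5, 0.5)
    y = rng.uniform(-0.5, 0.5)
    # A: the coin stays clear of all four sides of the square
    a_occurs = abs(x) < 0.5 - r and abs(y) < 0.5 - r
    # Z: distance from the center of the coin to the center of the square
    z = math.hypot(x, y)
    return (x, y), a_occurs, z

rng = random.Random(3)   # arbitrary seed
results = [buffon_coin(0.2, rng) for _ in range(100)]
count_A = sum(1 for _, a_occurs, _ in results if a_occurs)
print(count_A)
```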

A point \((X, Y)\) is chosen at random in the circular region of radius 1 in \(\R^2\) centered at the origin. Let \(S\) denote the set of outcomes. Let \(A\) denote the event that the point is in the inscribed square region centered at the origin, with sides parallel to the coordinate axes. Let \(B\) denote the event that the point is in the inscribed square with vertices \((\pm 1, 0)\), \((0, \pm 1)\).

In the simple model of structural reliability, a system is composed of \(n\) components, each of which is either working or failed. The state of component \(i\) is an indicator random variable \(X_i\), where 1 means working and 0 means failure. Thus, \(\bs X = (X_1, X_2, \ldots, X_n)\) is a vector of indicator random variables that specifies the states of all of the components, and therefore the set of outcomes of the experiment is \(S = \{0, 1\}^n\). The system as a whole is also either working or failed, depending only on the states of the components and how the components are connected together. Thus, the state of the system is also an indicator random variable and is a function of \( \bs X \). The state of the system (working or failed) as a function of the states of the components is the structure function.

A series system is working if and only if each component is working. The state of the system is \[U = X_1 X_2 \cdots X_n = \min\left\{X_1, X_2, \ldots, X_n\right\}\]

A parallel system is working if and only if at least one component is working. The state of the system is \[V = 1 - \left(1 - X_1\right)\left(1 - X_2\right) \cdots \left(1 - X_n\right) = \max\left\{X_1, X_2, \ldots, X_n\right\}\]

More generally, a \(k\) out of \(n\) system is working if and only if at least \(k\) of the \(n\) components are working. Note that a parallel system is a 1 out of \(n\) system and a series system is an \(n\) out of \(n\) system. A \(k\) out of \(2 k\) system is a majority rules system.

The state of the \(k\) out of \(n\) system is \( U_{n,k} = \bs 1\left(\sum_{i=1}^n X_i \ge k\right) \). The structure function can also be expressed as a polynomial in the variables.
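The structure functions above are simple to code, and the relationships among them can be verified by enumerating all component states. The sketch below does this for \(n = 3\); the function names are illustrative.

```python
from itertools import product

def k_out_of_n(x, k):
    """State of a k out of n system: 1 if at least k components work, else 0."""
    return 1 if sum(x) >= k else 0

def series(x):
    """Series system: the product of the component states."""
    state = 1
    for xi in x:
        state *= xi
    return state

def parallel(x):
    """Parallel system: one minus the product of the complements."""
    state = 1
    for xi in x:
        state *= 1 - xi
    return 1 - state

n = 3
states = list(product((0, 1), repeat=n))   # the set of outcomes {0, 1}^n

# Series = min = n out of n; parallel = max = 1 out of n
series_ok = all(series(x) == min(x) == k_out_of_n(x, n) for x in states)
parallel_ok = all(parallel(x) == max(x) == k_out_of_n(x, 1) for x in states)
print(series_ok, parallel_ok)
```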

Explicitly give the state of the \(k\) out of 3 system, as a polynomial function of the component states \((X_1, X_2, X_3)\), for each \(k \in \{1, 2, 3\}\).

In some cases, the system can be represented as a graph or network. The edges represent the components and the vertices the connections between the components. The system functions if and only if there is a working path between two designated vertices, which we will denote by \(a\) and \(b\).

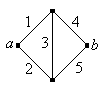

Find the state of the Wheatstone bridge network shown in the figure, as a function of the component states. The network is named for Charles Wheatstone.

\(Y = X_3 (X_1 + X_2 - X_1 X_2)(X_4 + X_5 - X_4 X_5) + (1 - X_3)(X_1 X_4 + X_2 X_5 - X_1 X_2 X_4 X_5)\). Note that if component 3 works, then the system works if and only if component 1 or 2 works, and component 4 or 5 works. On the other hand, if component 3 does not work, then the system works if and only if components 1 and 4 work, or components 2 and 5 work.
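The polynomial can be checked against the verbal description by enumerating all \(2^5 = 32\) component states. The following sketch codes both versions; the function names are hypothetical.

```python
from itertools import product

def bridge_polynomial(x1, x2, x3, x4, x5):
    """State of the bridge network, using the polynomial formula."""
    return (x3 * (x1 + x2 - x1 * x2) * (x4 + x5 - x4 * x5)
            + (1 - x3) * (x1 * x4 + x2 * x5 - x1 * x2 * x4 * x5))

def bridge_logic(x1, x2, x3, x4, x5):
    """State of the bridge network, using the verbal description."""
    if x3:
        # Component 3 works: need (1 or 2) and (4 or 5)
        works = (x1 or x2) and (x4 or x5)
    else:
        # Component 3 fails: need (1 and 4) or (2 and 5)
        works = (x1 and x4) or (x2 and x5)
    return 1 if works else 0

agree = all(bridge_polynomial(*x) == bridge_logic(*x)
            for x in product((0, 1), repeat=5))
print(agree)
```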

Not every function \(u: \{0, 1\}^n \to \{0, 1\}\) makes sense as a structure function. Explain why the following properties might be desirable:

The model just discussed is a static model. We can extend it to a dynamic model by assuming that component \(i\) is initially working, but has a random time to failure \(T_i\), taking values in \([0, \infty)\), for each \(i \in \{1, 2, \ldots, n\}\). Thus, the basic outcome of the experiment is the random vector of failure times \((T_1, T_2, \ldots, T_n)\), and so the set of outcomes is \([0, \infty)^n\).

Consider the dynamic reliability model for a system with structure function \(u\) (valid in the sense of the previous exercise).

Suppose that we have two devices and that we record \((X, Y)\), where \(X\) is the failure time of device 1 and \(Y\) is the failure time of device 2. Both variables take values in the interval \([0, \infty)\), where the units are in hundreds of hours. Sketch each of the following events:

Recall the previous discussion of genetics if you need to review some of the definitions in this subsection.

Recall first that the ABO blood type in humans is determined by three alleles: \(a\), \(b\), and \(o\). Furthermore, \(o\) is recessive and \(a\) and \(b\) are co-dominant.

Suppose that a person is chosen at random and his genotype is recorded. Give each of the following in list form.

Suppose next that pod color in a certain type of pea plant is determined by a gene with two alleles: \(g\) for green and \(y\) for yellow, and that \(g\) is dominant.

Suppose that \(n\) (distinct) pea plants are collected and the sequence of pod color genotypes is recorded.

Next consider a sex-linked hereditary disorder in humans (such as colorblindness or hemophilia). Let \(h\) denote the healthy allele and \(d\) the defective allele for the gene linked to the disorder. Recall that \(d\) is recessive for women.

Suppose that \(n\) women are sampled and the sequence of genotypes is recorded.

The emission of elementary particles from a sample of radioactive material occurs in a random way. Suppose that the time of emission of the \(i\)th particle is a random variable \(T_i\) taking values in \((0, \infty)\). If we measure these arrival times, then the basic outcome vector is \((T_1, T_2, \ldots)\) and so the set of outcomes is \(S = \{(t_1, t_2, \ldots): 0 \lt t_1 \lt t_2 \lt \cdots\}\).

Run the simulation of the gamma experiment in single-step mode for different values of the parameters. Observe the arrival times.

Now let \(N_t\) denote the number of emissions in the interval \((0, t]\). Then

Run the simulation of the Poisson experiment in single-step mode for different parameter values. Observe the arrivals in the specified time interval.
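The counting variable \(N_t\) is a function of the arrival times: it is the number of indices \(i\) with \(T_i \le t\). The sketch below computes it from a short, made-up list of increasing arrival times (the numbers are hypothetical and not data from the text), using binary search from the standard library.

```python
from bisect import bisect_right

# Hypothetical arrival times, strictly increasing
arrival_times = [0.4, 1.1, 1.7, 3.2, 4.8]

def N(t):
    """Number of emissions in the interval (0, t]."""
    # bisect_right counts the arrival times that are <= t
    return bisect_right(arrival_times, t)

print(N(2.0))   # arrivals at 0.4, 1.1, 1.7
print(N(0.4))   # the right endpoint is included
print(N(0.3))   # no arrivals yet
```

For these values, \(N_t \ge n\) if and only if \(T_n \le t\), which links the counting variables to the arrival times.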

In the basic cicada experiment, a cicada in the Middle Tennessee area is captured and the following measurements recorded: body weight (in grams), wing length, wing width, and body length (in millimeters), species type, and gender. The cicada data set gives the results of 104 repetitions of this experiment.

For gender, let 0 denote female and 1 male; for species, let 1 denote tredecula, 2 tredecim, and 3 tredecassini.

In the basic M&M experiment, a bag of M&Ms (of a specified size) is purchased and the following measurements recorded: the number of red, green, blue, yellow, orange, and brown candies, and the net weight (in grams). The M&M data set gives the results of 30 repetitions of this experiment.